The Most Realistic Threats Posed by Artificial Intelligence

Ryleigh Nucilli

These days, the internet abounds with theories about the potential threats posed by artificial intelligence (AI). Movies set in frightening dystopian futures, where humans wage war against their dictatorial robot overlords, fill theaters. People debate the likely timing and theoretical causes of the Singularity, both in comment sections and on major conference stages. But what harm do those in the know (scientists, technological wizards, and Silicon Valley investors) think AI is really capable of?

This list brings together some of the most widespread theories about the possible threats posed by AI. Sure, the Singularity is something to be concerned about, but have you ever considered that the next major military race might be driven by developing AI for the battlefield? Or that AI might make your job redundant? Or, for that matter, that a well-intentioned AI might destroy everything that stands between it and its intended function? If these questions interest you, read on to see what the experts think about the negative effects of artificial intelligence!

________________________________________

• An AI Arms Race

Photo: Public Domain / Wikimedia Commons

On July 28, 2015, a group of prominent figures, including Elon Musk, Stephen Hawking, and Noam Chomsky, released an open letter about one of the biggest threats posed by AI: an autonomous weapons arms race. In the letter, Musk and Hawking, along with an impressively long list of AI and robotics researchers, describe the potential for “a third revolution in warfare, after gunpowder and nuclear arms.” On the one hand, autonomous weapons could reduce the number of human soldiers who die in battle. On the other hand, competing military powers could start a global arms race that produces autonomous weapons capable of everything from assassinations to genocide. According to the signatories, avoiding these outcomes is a matter of shared human ethics, not technological capability.

• AI Specifically Programmed for Devastation

Photo: niXerKG / Flickr

Related to the idea of an AI arms race, technologists fear that AI capable of killing could fall into the wrong hands. The Future of Life Institute (the organization behind the open letter on avoiding an AI arms race) warns that, should these kinds of weapons come into existence, they would be designed to be “extremely difficult to simply ‘turn off.’” According to the institute, this risk is present even with today’s narrow AI, but it grows as “levels of AI intelligence and autonomy increase.”

• AI-Created Unemployment

Photo: na.harii / Flickr

Although a little less scintillating than an AI arms race (but still pretty darn scary), experts predict that AI will keep making human labor redundant, creating unemployment. We’ve already seen this happen in industries involving manual labor, where mechanized assembly lines have replaced human workers. In the future, however, experts envision AI replacing humans in many “knowledge” industries as well (the ones where human knowledge and creative problem-solving abilities are really important). Medicine, law, and architecture are all beginning to experience changes as AI makes its way into the professions.

• AI as a Runaway Task Master

Photo: Jamie / Flickr

There’s a philosopher named Nick Bostrom who likes to use a scenario about paper clips to illustrate the problem of AI as a runaway task master. In the scenario, Bostrom envisions an AI that’s really good at its job: turning things into paper clips. But, because it doesn’t share human values or judgment, the AI starts turning everything in sight into paper clips, including people! The paper clips keep piling up and up, and the AI is on a runaway paper clip train to nowhere.

The point of this seemingly silly example is to demonstrate the fear some experts hold about AI’s unintended consequences. It’s theoretically possible for an AI to become incredibly good at the thing it’s designed to do, so good, in fact, that its pursuit of that purpose leads it to run over anything in its way. Like turning the planet into a pile of paper clips, for example.

• Accidentally Destructive AI

Photo: Judith Doyle / Flickr

Because the full range of future consequences of a given AI likely can’t be known in advance, experts fear that an AI designed to do good things might inadvertently do… not-so-good things to achieve its goals, developing destructive methods along the way. The philosopher Nick Bostrom imagines a scenario in which an AI tasked with the goal of “harm-no-humans” achieves it by preventing “any humans from ever being born.” It would just be doing its job, right?

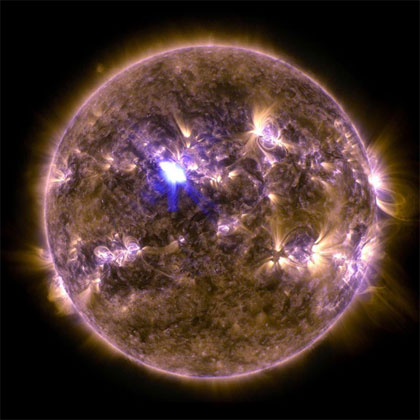

• The Singularity

Photo: NASA Goddard Photo and Video / flickr / CC-BY 2.0

The rich history of science fiction on the subject makes the AI-driven “Singularity” perhaps the best-known threat in popular discourse. For those who don’t know, “the Singularity” is the point at which AI surpasses human intelligence. It’s the moment when the machines (if they haven’t previously been programmed to treat us with compassion) take over.

Although it’s not the most talked-about “realistic” possibility posed by AI, theoretical physicist Stephen Hawking and Tesla mastermind Elon Musk have both been quoted saying that they fear the existential threat AI poses to humankind. Hawking is optimistic, though. He theorizes that by the time the Singularity happens, humans might be living beyond Earth, so the AI can just go ahead and have it.

• Hacked Self-Driving Cars

Photo: Marc van der Chijs / flickr / CC-BY-ND 2.0

Although self-driving vehicles could be hacked for malicious purposes (say, an attacker suddenly taking over the steering and enlisting an unsuspecting driver in a suicide mission), experts believe the most realistic car-hacking concern is having our data stolen.

Craig Smith, transportation security director at Rapid7, told Bloomberg News that plugging our phones into our cars’ USB ports poses a greater risk of “hacking” than having our vehicles taken over remotely. As he put it, “an attacker usually isn’t going to try to control your car as much as they may try to get information from your car.” And the more technologically sophisticated the car, the more opportunities attackers have to access the owner’s data.

• Human-Machine Cyborgs

Photo: caseorganic / flickr / CC-BY-NC 2.0

A group of scientists at Kernel, a start-up in Venice, CA, is currently working to “unlock” the “trapped potential” of the human brain. They’re doing this by building bridges between human and machine through microchips implanted in the brain. At the moment, Kernel is testing this “neuroprosthetic” (as they call it) on people with cognitive disorders, including Alzheimer’s and traumatic brain injuries, to see whether, by replicating and replacing communication between brain cells, it helps them perform cognitive tasks with greater ease and fluency. In the future, Kernel hopes the microchip will become affordable and widely accessible as a cognitive enhancement device. Doesn’t this sound like creepy cyborg fiction?

• We Are Living in a Simulation

Photo: NASA Goddard Photo and Video / flickr / CC-BY 2.0

OK, so maybe this one isn’t the most “realistic” threat posed by AI, but some pretty smart people, including Elon Musk, believe it’s at least theoretically possible that we’re all just characters in a video game simulation run by some incredibly advanced galactic society. Speaking at a 2016 conference, Musk was asked about the possibility that we’re all just highly realistic simulations that only seem capable of thinking and acting autonomously. Musk replied:

Forty years ago we had Pong – two rectangles and a dot. That’s where we were. Now 40 years later we have photorealistic, 3D simulations with millions of people playing simultaneously and it’s getting better every year. And soon we’ll have virtual reality, we’ll have augmented reality. If you assume any rate of improvement at all, then the games will become indistinguishable from reality, just indistinguishable.

Translation: based on the advancements we’ve seen in video games over the last 40 years and the expected rate of development in the future, it’s totally possible that we’re simulations.

• We Aren’t the Champions, My Friends

Photo: Peter Miller / Flickr

So, this might be more of a blow to our (human) egos than a “threat” to our very existence, but another consequence of rapidly advancing AI is that we aren’t, and won’t be, the best at the skills and games we pride ourselves on. First, IBM’s Deep Blue defeated world chess champion Garry Kasparov in 1997. Then, in 2015 and 2016, Google DeepMind’s machine-learning program AlphaGo beat European champion Fan Hui and South Korean professional Lee Sedol, one of the world’s top players, at Go (an ancient, abstract game with nearly limitless variations in strategy). What’s next, AI winning the Super Bowl? The UEFA Cup? Will we all be getting “participation” ribbons in the near future?

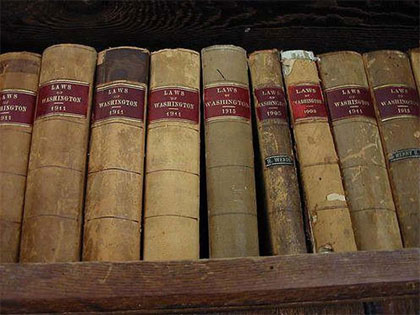

• Outpaced Legal Frameworks

Photo: Keith Robinson / Flickr

One (perhaps mundane) concern that technology wizards have about the present and future of AI is that our current legal frameworks are inadequate for anticipating the social changes and possible consequences it brings. To keep up, current legislation needs serious reform to account for the privacy and encryption issues associated with AI. Without adequate legislation, AI development is basically the Wild West, which carries its own set of potential threats, possibilities, and consequences.

• People Think They Understand AI

Photo: JosepPAL / Wikimedia Commons

Research on human risk assessment tells us that we’re not very good at figuring out how dangerous things might be, which makes it extremely difficult to accurately assess the threat AI poses. A common cognitive bias, anthropomorphism, illustrates this: because we’re human, we expect human-like things to behave in ways that make sense to us, so we project human values and expectations onto nonhuman things. Since AI isn’t human, this bias distorts our predictions about its potential and consequences. Basically, we humans are potentially our own biggest threat when it comes to AI, because we might create something that veers wildly from our predictions.

ranker.com